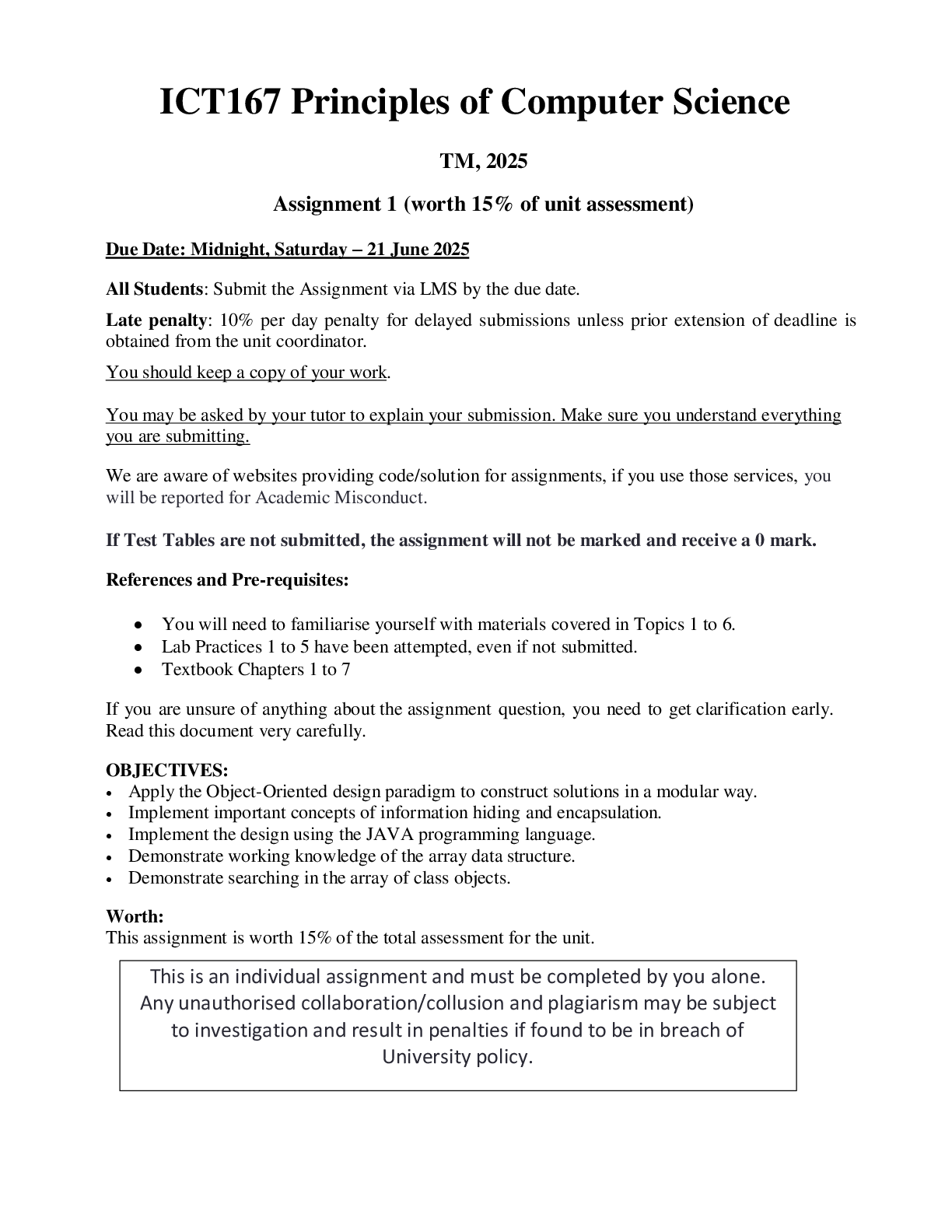

CMSC 421 Final Exam | Questions and Answers (Complete Solutions)

What is Machine Learning?
The field of study that gives computers the ability to learn without being explicitly programmed.

What is a Neural Network?
A neural network is a machine learning model made of layers of connected nodes (neurons) that learn patterns in data to make predictions or decisions.

The three basic types of layers in a Neural Network
1) Input Layer
- Where data enters the network
2) Hidden Layer
- Where the network learns patterns
- There can be multiple hidden layers
3) Output Layer
- Where the final prediction is made

Name the specialized types of layers (hidden layers) in a neural network covered in lecture.
Dense (Fully Connected) Layer - Every neuron connects to all neurons in the previous layer.
Convolutional Layer - Detects spatial features in data (commonly used in image models).
Dilated Convolution Layer - A variation of convolution that spreads out the kernel, allowing the model to capture wider context without increasing computation. Often used in image and sequence data.
Deconvolutional Layer - Upsamples feature maps, often used in image generation or reconstruction.
Pooling Layer - Downsamples the input, reducing spatial size and computation.
Unpooling Layer - Reverses pooling to restore spatial dimensions (used in segmentation tasks).
Embedding Layer - Converts discrete items (e.g., words) into continuous vectors for processing.
Attention Layer - Computes the relevance or importance of different parts of the input to each other. Used in models like Transformers to capture long-range dependencies in data.
Self-Attention Layer - A special type of attention where the model learns relationships between elements within the same input sequence. Crucial for models like Transformers, enabling parallel processing of input data.
The layers below were not really covered, but are important enough to know:
Normalization Layer - E.g., Batch Normalization; stabilizes and speeds up training.
Dropout Layer - Randomly disables neurons during training to prevent overfitting.

What is Supervised Learning?
A machine learning model learns from examples that include both the input data and the correct answer, so it can predict the answer for new data. In supervised learning, each example is a pair consisting of an input object (typically a vector) and a desired output value (also called the supervisory signal).

A Classification problem has...?
- A target variable you want to predict (called a 'class' or a 'label')
- A set of historical data where that target label is known
- New data where that target variable is unknown
- A model

What is a model?
A mathematical object that takes data where the label/class is unknown and assigns it a label/class.

What is classification? How does it relate to ML?
The process of deciding between categories. For example: is this person likely to pay back a loan or not? This relates to ML because, by training a model on historical data, we can estimate how likely someone is to pay back a loan.

Linear Classifier for Binary Classification
Formula: class = sign(w · input + w_0)
w (the weight vector) and input are vectors; w_0 is the bias term.

Linear Classifier for Multiclass Classification
For each class i: score_i = w_i · x + b_i
Whichever class has the highest score is the predicted class.

What are weights?
Weights determine how much influence an input feature has.

How do weights relate to loss?
Weights are adjusted during training to reduce the loss, using optimization techniques such as Gradient Descent.

What is Loss?
It quantifies the error made by the model; the smaller the loss, the better the model is performing on that data.

What is a hyperparameter?
An argument to the model that determines how the model behaves. It is set before training rather than learned from the data (e.g., batch size or dropout rate).

Classification vs Regression
Classification:
- Predicts a class label (labels are discrete)
Ex) Predicting a movie rating as 1, 2, 3, 4, or 5 stars
Regression:
- Predicts a continuous quantity
Ex) Predicting a movie rating anywhere from 1 through 5 stars, like 4.2

What is Gradient Descent?
An optimization algorithm used to minimize a loss function by iteratively adjusting the model's parameters.
1) Start with a random set of weights.
2) Classify some number of points (called our batch size).
3) Compute the gradient of the loss with respect to the weights; it gives the direction and rate of steepest increase of the loss.
4) Adjust the weights in the direction opposite to the gradient, which decreases the loss.
5) Iterate until convergence.

Name the 3 different kinds of Loss Functions
1) Cross Entropy Loss
2) Hinge Loss
3) Squared Hinge Loss

What is Cross Entropy Loss? Give the formula, its behavior, what it does, and what it's used for
Formula: -Σ_i y_i * log(p_i), summed over all classes i
Behavior: Penalizes confident but wrong predictions heavily.
What it does: Measures how well the predicted probability distribution matches the true labels.
Used for: Classification problems, both binary and multiclass (often with softmax).

What is Hinge Loss? Give the formula, its behavior, what it does, and what it's used for
Formula: max(0, 1 - y * score), where y is the true label (+1 or -1) and score is the raw model output
Behavior: If the prediction is correct and beyond the margin, loss is 0. Otherwise, it increases linearly.
What it does: Encourages the model to make predictions that are not just correct, but correct with a margin (confidence).
Used for: Binary classification with models like Support Vector Machines (SVMs).

What is Squared Hinge Loss? Give the formula, its behavior, what it does, and what it's used for
Formula: max(0, 1 - y * score)^2
Behavior: If the prediction is correct and beyond the margin, loss is 0. Otherwise, it increases more sharply for predictions that are wrong or too close to the margin.
What it does: Encourages the model to make predictions that are not just correct, but correct with a margin (confidence). The margin violation is squared.
Used for: Also SVMs, but with a stronger penalty for violations.

The root of supervised learning
Given some function that classifies our data, minimize a given loss function.

Pros and Cons of Linear Classifiers
Pros:
- Very simple, and a lot of things are surprisingly linear
- Fast to train
Cons:
- Can only classify linearly separable data

Limitations of a linear classifier
Can only classify linearly separable data.

Neurons as a Metaphor (Mathematically)
A neuron takes the weighted sum of the inputs plus a bias, then passes the result through an activation function (such as a step function, sigmoid, or ReLU) to produce the output.

Name the neuron activation functions covered in lecture
1) Step Function
2) Sigmoid
3) ReLU

Things that Computer Vision neural networks struggle with
- Viewpoint Variation (different angles)
- Illumination Conditions
- Scale Variation
- Deformation (Ex. a cat being in a weird position)
- Background Clutter
- Occlusion (part of an object is hidden)
- Intra-class Variation (Ex. not every chair is the same)

Convolutional Neural Networks
A type of feed-forward artificial neural network where the connectivity between neurons is designed to respond to overlapping regions of the input (called local receptive fields). This allows the network to capture spatial patterns and use surrounding context to better understand the data. Inspired by the organization of the visual cortex in animals.

Different objectives of Computer Vision (Image Recognition Tasks)
Classification: What is this image of?
Localization: Which part of the image contains the object that determines its class?
Object Detection: Labeling objects in the image (Ex. if it's an image of a cat and a dog, detect which one is the cat and which one is the dog).
Semantic/Instance Segmentation: Which pixels are what? Is this pixel a cat or a dog?

What is the intuition behind a convolutional layer?
Create filters that capture specific features or elements of an image.
Examples of features/elements of an image:
- Edges
- Textures
- Shapes
- Patterns

What happens in a convolutional layer during forward propagation in a neural network?
Each filter scans across the input image (or the output of the previous layer), computing a dot product at each location to detect specific features. The resulting values form a feature map, where higher activations indicate a stronger presence of the feature (e.g., an edge) at that location. For example, if a convolutional layer is responsible for capturing edges, it will produce higher activations in regions where edges are present.

What is a feature map?
A feature map is the output of a convolutional layer after a filter has been applied to the input. It is a 2D grid of numbers where higher values indicate a stronger presence of the feature the filter is designed to detect, at that specific location in the input.

What is zero padding?
A technique where extra rows and columns of zeros are added around the border of the input image or feature map before applying a convolution, so the filter doesn't go off the edges. It is used for edge coverage and to preserve the output size.

What is a local receptive field? When is it used?
A local receptive field is the specific region of the input that a single neuron or filter in a convolutional layer sees. Essentially, it's the small region of the image that the filter processes at a time, which helps it focus on specific features without oversimplifying.

What is stride length? What does it mean when it's very low? very high?
Stride length refers to how far the receptive field (filter) moves across the input at each step of a convolution operation.
Very low stride: Processes small sections at a time. (Can lead to unnecessary computation and overfitting.)
Very high stride: Skips over large sections.
(Can miss important details and underfit.)

What is the Step Function?
An activation function that outputs 0 or 1 depending on a threshold. It is not used much today because it is not differentiable at the threshold and its gradient is zero everywhere else, so it gives backpropagation nothing to work with.

What is ReLU?
Rectified Linear Unit. It is a piecewise activation function where:
If x < 0 -> return 0
Else -> return x
Widely used for its simplicity and efficiency.

What is sigmoid?
An activation function defined as Sigmoid(x) = 1 / (1 + e^-x)
Smooth, S-shaped curve between 0 and 1; useful for probability outputs but can suffer from vanishing gradients.

Step Function vs ReLU vs Sigmoid
Step Function:
- Discontinuous; outputs 0 or 1
- Not commonly used today due to lack of differentiability
- Very basic, and not ideal for backpropagation
ReLU:
- Faster to compute than Sigmoid
- Reduced likelihood of vanishing gradients (for positive inputs)
- Outputs zero for negative inputs (introducing sparsity)
Sigmoid:
- Smooth and bounded output between 0 and 1
- Useful for probabilities and binary classification
- Monotonic (always increasing) and continuous, but can lead to vanishing gradients

What is Dilated Convolution?
A dilated convolution is a variant of the standard convolution where the filter skips over pixels, increasing the receptive field without increasing the number of parameters. For example, with a dilation factor of 2, the filter samples every second pixel from the input rather than directly adjacent pixels. This means a 3×3 filter with dilation 2 effectively operates over a 5×5 region instead of just a 3×3 region.

Dilated Convolution vs Normal Convolution
Dilated:
- Less computationally expensive for a given receptive field
- Better for capturing global features
- Used in tasks like image segmentation and audio processing
Normal:
- More computationally expensive for the same receptive field
- Better for capturing local features
- Commonly used in the initial layers of networks

What happens with each convolutional layer? Specifically in the beginning of processing, middle of processing, and towards the end of processing.
Each convolutional layer learns to detect increasingly specific features at different levels of abstraction.
Beginning/Low Level: Captures basic details such as edges, textures, and color gradients.
Mid/Intermediate Level: Detects more complex features like parts of objects (e.g., eyes, ears, and noses in face recognition).
End/High Level: Recognizes entire structures or objects, such as faces, animals, or other large patterns.

What is a pooling layer? When is it used? What are its benefits?
A pooling layer reduces the spatial dimensions of the input by aggregating values from neighboring pixels (e.g., using max or average pooling). It is used after a convolutional layer to suppress noise and reduce dimensionality, while preserving the most important information (as highlighted by the convolutional layer's feature detection).
Benefits:
- No need for weights (non-learnable operation)
- Reduces computational load
- Increases robustness (helps the model perform well despite small variations in the input)

Max Pooling vs Average Pooling
Max Pooling:
- Takes the maximum value from the region
- Useful for retaining the most prominent feature
Average Pooling:
- Takes the average value from the region
- Provides a smoother representation of the features

What is a Fully Connected Layer?
The layer responsible for classification after the feature learning process in a Convolutional Neural Network. It flattens the output from the previous layers and connects every neuron to every neuron in the next layer. Finally, it uses softmax() (for multi-class classification) to produce class probabilities.

What happens if the batch size is too big? too small?
Too big: It tends to underfit. It fails to capture the underlying patterns in the data and generalizes too broadly.
Too small: It tends to overfit. It learns too much noise or irrelevant detail and loses the ability to generalize.

What does dropout rate mean? When is it used? Why use it?
The dropout rate is the probability that a neuron (along with its connections) is randomly ignored (dropped). Dropout is applied during training only. This forces the network not to rely too heavily on any one neuron, encouraging it to learn more robust, generalized features.
You use it to:
- Reduce overfitting
- Make the model more resilient and generalized
- Encourage the network to learn redundant, distributed representations

What does it mean when a dropout rate is too high? too low?
A higher dropout rate forces the network not to rely too heavily on any one neuron. This helps prevent overfitting and makes the process go faster, but if the rate is too high the network will underfit.
A lower dropout rate may let the network rely too heavily on individual neurons. This may lead to overfitting and slower processing.

Describe the training process for a CNN/NN
1) Show the network n pictures, where n is your batch size.
2) Use the backprop algorithm, but randomly pick some number of neurons to participate (based on your dropout rate).
3) Repeat until the network loss stops decreasing.

What is the backpropagation algorithm?
Backpropagation is how a neural network learns: it starts with randomly initialized weights, calculates the error, works backward to see which weights caused it, and updates them to improve future predictions. This process continues until the loss stops decreasing.

When do we stop training?
When the loss stops decreasing.

How to build a neural network
1) Set up the input and output layers.
2) Determine which hidden layers to use and when to use them.
3) Choose the number of hidden layers, number of nodes, and activation functions for each layer.
4) Use backpropagation to train and optimize the network.

What are parameters in a network?
The learnable values of the network (its weights and biases). The parameter count is roughly the number of connections.

What is transfer learning?
Taking a pre-trained model and fine-tuning it on a new but related task with a smaller dataset. If the pre-trained model doesn't have the right categories, you adjust or replace the final layers, transforming it into a supervised learning problem.

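The training process on the cards above (process a batch, compute the gradient of the loss, step the weights against it, stop once the loss stops decreasing) can be sketched roughly as follows. This is a minimal sketch for a single sigmoid neuron trained with cross-entropy loss, not a CNN, and it leaves dropout out. The names `train`, `predict`, `mean_loss`, `TOY_DATA`, the learning rate, and the `patience` stopping rule are all illustrative assumptions rather than anything from the lecture.

```python
import math
import random


def sigmoid(z):
    """Sigmoid activation: 1 / (1 + e^-z)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Weighted sum of the inputs plus a bias, passed through the sigmoid."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def mean_loss(weights, bias, data):
    """Mean binary cross-entropy over (x, y) pairs with y in {0, 1}."""
    eps = 1e-12  # avoids log(0)
    total = 0.0
    for x, y in data:
        p = predict(weights, bias, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def train(data, batch_size=4, lr=0.5, patience=5, max_epochs=500, seed=0):
    """Mini-batch gradient descent that stops when the loss stops decreasing."""
    rng = random.Random(seed)
    n_features = len(data[0][0])
    # 1) Start with a random set of weights.
    weights = [rng.uniform(-0.1, 0.1) for _ in range(n_features)]
    bias = 0.0
    best, stale = float("inf"), 0
    for _ in range(max_epochs):
        shuffled = data[:]
        rng.shuffle(shuffled)
        # 2) Process the data one batch at a time.
        for start in range(0, len(shuffled), batch_size):
            batch = shuffled[start:start + batch_size]
            grad_w = [0.0] * n_features
            grad_b = 0.0
            for x, y in batch:
                # 3) Gradient of cross-entropy w.r.t. the pre-activation.
                err = predict(weights, bias, x) - y
                for j, xj in enumerate(x):
                    grad_w[j] += err * xj / len(batch)
                grad_b += err / len(batch)
            # 4) Step in the direction opposite to the gradient.
            weights = [w - lr * g for w, g in zip(weights, grad_w)]
            bias -= lr * grad_b
        # 5) Stop once the loss has not improved for `patience` epochs.
        loss = mean_loss(weights, bias, data)
        if loss < best - 1e-4:
            best, stale = loss, 0
        else:
            stale += 1
            if stale >= patience:
                break
    return weights, bias, loss


# Hypothetical, linearly separable toy data: label 1 when both inputs are large.
TOY_DATA = [
    ([0.0, 0.0], 0), ([0.2, 0.1], 0), ([0.1, 0.4], 0), ([0.3, 0.3], 0),
    ([0.9, 0.8], 1), ([1.0, 0.6], 1), ([0.7, 1.0], 1), ([0.8, 0.9], 1),
]

weights, bias, final_loss = train(TOY_DATA)
print(f"final loss: {final_loss:.3f}")
```

A full network would apply the same update to every layer, with backpropagation supplying each layer's gradient.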
What are GANs?
Generative Adversarial Networks, used to generate realistic images through a Generator -> Discriminator process. The generator takes some noise and runs it through a bunch of filters until it has an image. The discriminator takes the image, compresses it, and then determines whether it's real or fake. If fake, it sends the image back to the generator. If 'real', it outputs the image.

How do we compress images? What about reconstructing images?
Auto-encoders handle both. They are composed of encoders and decoders: encoders for the compression, decoders for the reconstruction.

What are auto-encoders? How do they work? Why are they useful?
Auto-encoders learn to compress input data (images) into a latent space vector using an encoder, and then reconstruct the original data using a decoder. They are a form of unsupervised learning and are excellent for learning meaningful internal features of the data, even without labeled examples.
They are useful for:
- Image Compression
- Denoising
- Semantic Segmentation
- Data Generation
- Learning weights without labeled data (Feature Learning)

What is a Deconvolutional Layer?
The opposite of a convolutional layer. It takes a smaller feature map (like a latent space vector) and increases its spatial dimensions, effectively "upsampling" the data to reconstruct a larger image or feature map.

What is an Unpooling Layer?
The opposite of a pooling layer. It increases the spatial dimensions of the input by placing values back into their original locations, often using the indices saved during pooling (e.g., max pooling).

Explain the process of encoding. decoding?
The encoder uses convolutional and pooling layers to extract and compress important features from the input, storing the result in a latent space vector.
The decoder then takes this latent vector and uses deconvolutional (transposed convolution) and unpooling layers to reconstruct the original input as closely as possible.

What is the correlation between Game Theory and a GAN?
A GAN is a game with two agents: the Generator and the Discriminator. It is a zero-sum game because if the Generator wins, the Discriminator loses, and vice versa. Convergence happens when we reach a mixed strategy Nash equilibrium for the discriminator.

What happens if the discriminator gets much better than the generator?
The vanishing gradient problem. The discriminator becomes so good that it easily distinguishes fake from real, giving the generator very little feedback (gradient) to learn from. As a result, the generator stops improving and training fails to converge.

What is the vanishing gradient problem in a GAN?
The discriminator becomes so good that it easily distinguishes fake from real, giving the generator very little feedback (gradient) to learn from. As a result, the generator stops improving and training fails to converge.

How to combat the vanishing gradient problem in a GAN?
- Switch to the non-saturating heuristic
- Limit the discriminator somehow
- Try to balance training

Why would we want to force convergence in a GAN? How can we do that?
We want to force convergence in a GAN so that the generator can produce realistic outputs that successfully fool the discriminator. Without convergence, training can become unstable or collapse.
How we can do that:
- Add a regularization term to reduce oscillations and keep parameters near a moving average.
- Use soft and noisy labels to prevent the discriminator from becoming too confident.
- Use DCGAN or other hybrid architectures with stable design patterns.
- Apply stabilization tricks from reinforcement learning (e.g., experience replay, gradient penalties).
- Use advanced optimizers like Adam for smoother updates.

When would Non-Convergence occur in a GAN? And why?
Non-convergence can occur when the generator and discriminator continuously try to outsmart each other, causing instability in training. This happens because the objective function is non-convex and both networks are updating simultaneously, making it hard to reach a stable Nash equilibrium.

What are Latent Space Vectors?
Vector embeddings of images, created by neural networks to compress images.

What is Mode Collapse? When does it occur? Why is it a problem?
Mode collapse is when the generator produces limited or repetitive outputs, even though the real data is diverse. It occurs when the generator finds a few outputs that consistently fool the discriminator, so it keeps generating those instead of exploring the full range of possibilities.
It is a problem because:
- The generator fails to generalize
- The GAN doesn't learn the full distribution of the data
- You lose diversity in generated samples

What are conditional GANs?
A Conditional GAN adds a guiding condition (like a label) to both the generator and discriminator, enabling more controlled and specific data generation.

Conditional GANs vs Traditional GANs
Conditional GANs (cGANs) allow you to control what the generator produces by providing an additional input condition (like a label or class). Example: you can generate a realistic image of a cat by conditioning the GAN on the label "cat."
Traditional GANs generate data without any control; they simply try to produce realistic outputs based on the training data, with no guidance or labels.

What model may we use to support text to image? Why?
Conditional GANs, because they allow generation based on labeled or auxiliary input, such as text descriptions.
The text acts as a condition, guiding the generator to produce images that match the content of the text.

How does face aging work using deep learning?
Face aging works by encoding an image into a latent space vector that includes features like age. Since latent space is continuous and structured, we can manipulate the age-related dimensions of the vector to make a person look older or younger. Then the modified vector is passed through a decoder or generator to produce the aged or de-aged face.

How do we pass text to neural networks?
Word Embeddings

What are word embeddings?
Dense vector representations of words that capture their meanings and relationships based on context and usage. They allow neural networks to process words as numbers while preserving semantic similarity (e.g., king and queen will have similar vectors).

True or False: Word embeddings preserve semantic similarity of words
True

How could we check the similarity between two different word embeddings?
Cosine Similarity: dot(A, B) / (||A|| ||B||)

What are some popular word embedding models?
- Word2Vec: captures local context well
- GloVe: captures global statistical information (how "King" appears near "Royal", "Queen", "Palace", etc.)

True or False: Word Embeddings are an example of Natural Language Processing
True

What is NLP? How does it work?
Natural Language Processing. A blanket term for all computational tasks involving human language.
NLP works because languages have structure. NLP captures this structure and the meaning of language using techniques like word embeddings and neural networks, which process language as vectors.

Latent Space Vector vs Word Embedding
Virtually the same concept: both are vector representations.
Latent Space Vector: vector representation of images (or other data types)
Word Embedding: vector representation of words

What are some problems in NLP?
- Sentences can be ambiguous
- Not everyone uses the same grammatical rules or spellings
- Word meanings can change
- Hard to transform words to a meaningful vector
- Sentiment Analysis
- Topic Modeling
- Question Answering
- Named Entity Resolution (figuring out who a phrase is referring to)
- Text Summarization

What are some affective dimensions a word has for word embeddings?
- Synonym/Antonym: Words with similar or opposite meanings (e.g., happy/sad)
- Connotation: The emotional or cultural meaning a word carries beyond its literal definition (e.g., slim vs. skinny)
- Relatedness: How conceptually close two words are (e.g., doctor and nurse)
- Valence: Measures how positive or negative a word feels (e.g., joy = high valence, grief = low valence)
- Arousal: Indicates the level of excitement or emotional intensity a word provokes (e.g., explosion = high arousal, calm = low arousal)
- Dominance: Reflects the sense of control or power associated with a word (e.g., command = high dominance, submissive = low dominance)

What is Word2Vec? How does it work?
A self-supervised model used to generate word embeddings. It works by training on large texts using an 'unrelated' task. This helps capture semantic relationships between words.
Examples: Skip-Gram and CBOW

What is Skip-Gram?
The opposite of CBOW. A technique in Word2Vec that predicts neighboring words based on a given target word.

What is Continuous Bag of Words (CBOW)?
The opposite of Skip-Gram. A technique in Word2Vec that predicts a target word based on its neighbors/context.

What is SoftMax?
SoftMax is a function that transforms a vector of raw scores (logits) into probabilities that sum to 1, making it useful for classification tasks. It is frequently used in neural networks because it is easy to differentiate, allowing for effective training via backpropagation.

How can we get the context of words while simultaneously capturing the different kinds of words?
Word Embedding.
Specifically, having a word embedding for both the center word (in Skip-Gram) and the context words (in CBOW).

How do we calculate the loss/probability of the output word given the context? Give the name, formula and why we use this formula
Name: Cross-Entropy Loss using SoftMax
Formula: p(o | c) = e^(dot(u_o, v_c)) / Σ_w e^(dot(u_w, v_c)), summed over all possible output words w
o = output
c = context
u, v = word embeddings for the output and context respectively
We use this formula because it is easy to differentiate (take the derivative), which is crucial for efficient training of neural networks through backprop.

Explain the necessary layers of a NN to predict words
1) Input Layer
2) Word-Embedding Matrix (Lookup Table)
- Captures semantic meaning by mapping words to dense vectors
3) Hidden Layer
- Embeds center words and computes the relationship between the center and context words
4) Word-Embedding for context words
- Allows context words to interact with the center words
5) Output Layer (Softmax)
- Converts scores into probabilities

How do we train a word embedding model?
Maintain one large vector that includes each word's context vector and center-word vector. Scan the text (corpus), and at each position, use the model to predict a word based on its context (or vice versa). Use Gradient Descent to update the vectors by minimizing the loss (mainly Cross-Entropy Loss using SoftMax).

How do we get the weights for word embedding?
We initialize a word embedding matrix (usually randomly), where each row corresponds to a word in the vocabulary. To get the embedding for a word, we multiply a one-hot vector of that word by the embedding matrix; this effectively selects the row (vector) for that word.

How can word embeddings be used to find analogies?
Through simple vector arithmetic. For example, to solve "King is to Man as Queen is to ?", compute: Queen_Vec - King_Vec + Man_Vec. If the word embeddings capture semantic relationships well, the result will be close to Woman_Vec.

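The softmax probability, cosine similarity, and analogy arithmetic from the cards above can be tried out on a tiny example. This is a sketch under stated assumptions: `TOY_EMBEDDINGS` is a made-up 2-D table (one axis loosely "royalty", the other loosely "gender"), the function names are hypothetical, and a single table stands in for the separate center-word and context-word vectors that a real Word2Vec model keeps.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cosine_similarity(a, b):
    """dot(A, B) / (||A|| ||B||)."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


# Hypothetical embeddings: axis 0 ~ "royalty", axis 1 ~ "gender".
TOY_EMBEDDINGS = {
    "king": [0.9, 0.9],
    "queen": [0.9, -0.9],
    "man": [0.1, 0.9],
    "woman": [0.1, -0.9],
    "palace": [0.8, 0.0],
}


def output_probabilities(center, embeddings):
    """p(o | c) for every word o: softmax over dot products with the center word."""
    words = list(embeddings)
    scores = [dot(embeddings[w], embeddings[center]) for w in words]
    return dict(zip(words, softmax(scores)))


def analogy(a, b, c, embeddings):
    """Solve 'a is to b as c is to ?' by finding the word nearest to c - a + b."""
    target = [
        vc - va + vb
        for va, vb, vc in zip(embeddings[a], embeddings[b], embeddings[c])
    ]
    candidates = [w for w in embeddings if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine_similarity(embeddings[w], target))


probs = output_probabilities("king", TOY_EMBEDDINGS)
answer = analogy("king", "man", "queen", TOY_EMBEDDINGS)
print(answer)
```

With these hand-picked vectors, Queen - King + Man lands exactly on the "woman" vector, so the analogy resolves cleanly; learned embeddings only approximate this.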
What is the relationship between word embeddings and GANs?
Word embeddings enable the training of Conditional GANs (cGANs) by providing text or attribute information as input. Conditional GANs use these embeddings as labels or conditions to generate specific outputs.
Example: Smiling Woman - Neutral Woman + Neutral Man = Smiling Man

What is GloVe?
GloVe (Global Vectors) is a count-based model: it learns embeddings by factorizing a matrix built from global word co-occurrence counts across the entire corpus. This enables us to capture global statistical information (e.g., how often "king" appears near "royal", "queen", "palace", etc.).

What is an LLM? How does it work?
Large Language Model. It is an AI system trained on large amounts of text data to perform NLP tasks. It uses neural networks, specifically transformer architectures. It learns through training, and makes predictions through word embeddings and attention mechanisms.

What does an LLM learn during training?
It learns the patterns, grammar, meaning, and structure of language in order to generate human-like text and perform NLP tasks such as translation, summarization, and question answering.

How do you steal a model?
Model Distillation

What is Model Distillation? What is it used for?
Model Distillation is the process of using the output of an already trained model to train a smaller model. It can be used to copy another model's behavior (by imitating its outputs, not by obtaining its actual weights), since once a network is trained, most of the hard work is already done. OpenAI has accused DeepSeek of doing this.

What measurements do we use to evaluate intelligence, and how well do LLMs perform on each?
1) Knowledge - Very Good
LLMs excel at retrieving and presenting factual information.

Last updated: 6 months ago

Uploaded on: May 29, 2025
Number of pages: 60