ISYE 6414 Final Exam Review 2022 with complete solution

Least Squares Estimation (LSE) cannot be applied to GLMs. Ans***False - it is applicable, but it does not fully use the information about the data's distribution.

In mul

...

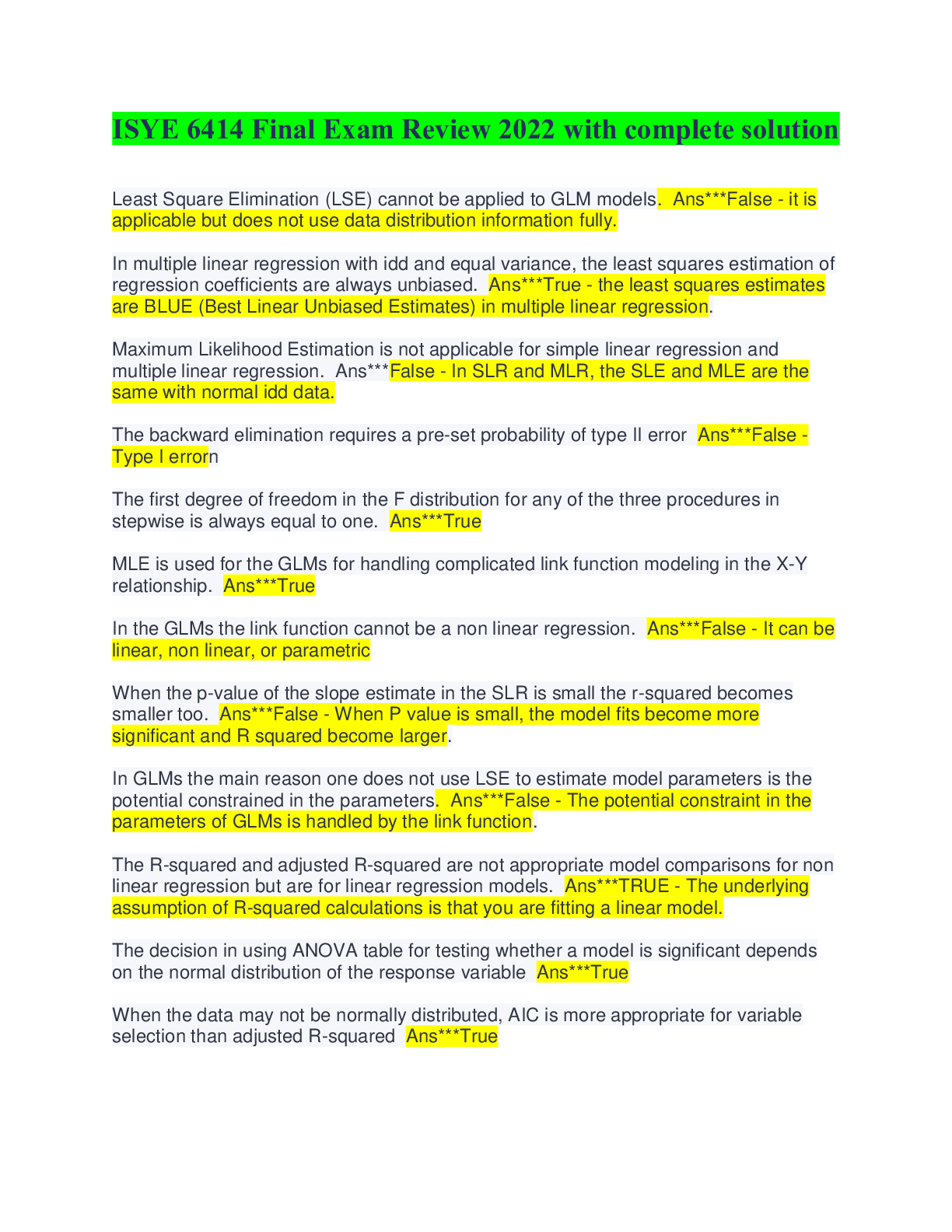

ISYE 6414 Final Exam Review 2022 with complete solution

Least Square Elimination (LSE) cannot be applied to GLM models. Ans***False - it is applicable but does not use data distribution information fully.

In multiple linear regression with i.i.d. errors of equal variance, the least squares estimates of the regression coefficients are always unbiased. Ans***True - the least squares estimators are BLUE (Best Linear Unbiased Estimators) in multiple linear regression.

Maximum likelihood estimation is not applicable to simple linear regression and multiple linear regression. Ans***False - in SLR and MLR with normal i.i.d. errors, the LSE and MLE of the regression coefficients are the same.
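
A short derivation shows why the two estimates coincide. Assume i.i.d. normal errors, and let $\mathbf{x}_i$ be the predictor vector of observation $i$ (including a leading 1 for the intercept). The log-likelihood of the linear model is then:

$$
\ell(\boldsymbol{\beta}, \sigma^2) = -\frac{n}{2}\log(2\pi\sigma^2) - \frac{1}{2\sigma^2}\sum_{i=1}^{n}\left(y_i - \mathbf{x}_i^\top\boldsymbol{\beta}\right)^2
$$

For any fixed $\sigma^2$, maximizing $\ell$ over $\boldsymbol{\beta}$ is the same as minimizing the sum of squared errors, so $\hat{\boldsymbol{\beta}}_{\text{MLE}} = \hat{\boldsymbol{\beta}}_{\text{LSE}}$. The two approaches differ only for the variance. The MLE is $\hat{\sigma}^2_{\text{MLE}} = \mathrm{SSE}/n$, while the usual unbiased estimate is $\mathrm{SSE}/(n-p-1)$ for $p$ predictors plus an intercept.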

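Backward elimination requires a pre-set probability of Type II error. Ans***False - it requires a pre-set probability of Type I error (the significance level used to decide whether a variable is removed).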

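The first (numerator) degree of freedom of the F distribution in any of the three stepwise procedures (forward selection, backward elimination, and stepwise regression) is always equal to one. Ans***True - each step adds or removes a single variable, so the numerator has one degree of freedom.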
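
For reference, here is a sketch of the partial F statistic used at each step. It assumes the full model has $p$ predictors plus an intercept and the reduced model drops the single candidate variable:

$$
F = \frac{(\mathrm{SSE}_{\text{reduced}} - \mathrm{SSE}_{\text{full}})/1}{\mathrm{SSE}_{\text{full}}/(n-p-1)} \sim F_{1,\,n-p-1}
$$

The 1 in the numerator counts the single coefficient being tested. This $F$ equals the square of that coefficient's $t$ statistic in the full model.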

MLE is used in GLMs to handle the complicated link function that models the X-Y relationship. Ans***True

In GLMs, the link function cannot be nonlinear. Ans***False - it can be linear, nonlinear, or parametric.
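
For example, the logit link used in logistic regression is nonlinear in the mean $\mu$ while staying linear in the coefficients:

$$
g(\mu) = \log\frac{\mu}{1-\mu} = \beta_0 + \beta_1 x
$$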

When the p-value of the slope estimate in SLR is small, R-squared becomes smaller too. Ans***False - for a fixed sample size, a smaller p-value means a larger test statistic, so the model fit is more significant and R-squared is larger.
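
In SLR, the slope's t statistic satisfies $t^2 = F = (n-2)R^2/(1-R^2)$. This quantity increases with $R^2$ for fixed $n$, which is why a smaller p-value goes with a larger R-squared. The sketch below checks the identity numerically on made-up data (the data values and the helper `slr_stats` are hypothetical). It checks the statistic rather than the p-value, because the t distribution is not in Python's standard library.

```python
import math

def slr_stats(x, y):
    """Fit y = b0 + b1*x by least squares; return (R^2, t statistic for b1)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    syy = sum((yi - my) ** 2 for yi in y)
    b1 = sxy / sxx
    b0 = my - b1 * mx
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    r2 = 1 - sse / syy
    se_b1 = math.sqrt(sse / (n - 2) / sxx)  # standard error of the slope
    return r2, b1 / se_b1

# Hypothetical data
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [2.1, 2.9, 4.2, 4.8, 6.1, 6.8, 8.3, 8.9]
r2, t = slr_stats(x, y)
n = len(x)
# These two quantities are equal (up to floating-point rounding)
print(t ** 2, (n - 2) * r2 / (1 - r2))
```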

In GLMs, the main reason one does not use LSE to estimate model parameters is the potential constraints on the parameters. Ans***False - potential constraints on the parameters of GLMs are handled by the link function.

R-squared and adjusted R-squared are not appropriate for comparing nonlinear regression models, but they are appropriate for linear regression models. Ans***True - R-squared calculations assume that you are fitting a linear model.

Using the ANOVA table to decide whether a model is significant depends on the response variable being normally distributed. Ans***True - the F-test in the ANOVA table relies on the normality assumption.

When the data may not be normally distributed, AIC is more appropriate for variable selection than adjusted R-squared Ans***True
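
AIC is computed from the maximized likelihood $\hat{L}$ and the number of estimated parameters $k$. It can therefore be used for any model with a specified distribution, such as a GLM; smaller values are preferred:

$$
\mathrm{AIC} = 2k - 2\log\hat{L}
$$

Adjusted R-squared, by contrast, is built from sums of squares, which are natural for least squares under normality.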

The slope of a linear regression equation is an example of a correlation coefficient. Ans***False - the correlation coefficient is the r value, which has the same sign (+ or -) as the slope.
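
In SLR, the slope and the correlation coefficient are related by $\hat{\beta}_1 = r \cdot s_y / s_x$. They share a sign, but they are equal only when $s_y = s_x$. The sketch below computes both from made-up data (the values and the helper name are hypothetical).

```python
import math

def slope_and_correlation(x, y):
    """Return (least squares slope, Pearson r, s_y / s_x) for paired data."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b1 = sxy / sxx                       # least squares slope
    r = sxy / math.sqrt(sxx * syy)       # Pearson correlation coefficient
    return b1, r, math.sqrt(syy / sxx)   # sqrt(syy / sxx) equals s_y / s_x

# Hypothetical data with a downward trend
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [10.0, 8.5, 7.0, 6.5, 4.0]
b1, r, ratio = slope_and_correlation(x, y)
print(b1, r, r * ratio)  # the slope matches r * s_y / s_x
```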

In multiple linear regression, as the value of R-squared increases, the relationship between predictors becomes stronger. Ans***False - R-squared measures how much of the variability in the response is explained by the model, NOT how strongly the predictors are related to each other.

When dealing with a multiple linear regression model, an adjusted R-squared can be greater than the corresponding unadjusted R-squared value. Ans***False - the adjusted R-squared takes the number of predictors into account, so it is never greater than the unadjusted R-squared.
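
With the usual definition for $n$ observations and $p$ predictors plus an intercept, adjusted $R^2 = 1 - (1 - R^2)(n-1)/(n-p-1)$. The factor $(n-1)/(n-p-1)$ is at least 1, so adjusted $R^2 \le R^2$, with equality only when $R^2 = 1$ or $p = 0$. For weak fits it can even be negative. A minimal sketch, using hypothetical values of $n$, $p$, and $R^2$:

```python
def adjusted_r2(r2, n, p):
    """Adjusted R-squared for a model with p predictors plus an intercept, fit to n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)

# Hypothetical R-squared values for a model with 4 predictors and 30 observations
for r2 in (0.2, 0.5, 0.9, 0.99):
    adj = adjusted_r2(r2, n=30, p=4)
    print(f"R^2 = {r2:.2f}, adjusted R^2 = {adj:.4f}")
```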

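In a multiple regression problem, a quantitative input variable x is replaced by x − mean(x). The R-squared for the fitted model will be the same. Ans***True - centering only shifts x, and the intercept absorbs the shift, so the fitted values and R-squared do not change.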
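
The sketch below illustrates this with a two-predictor OLS fit written in plain Python. It solves the normal equations by Gaussian elimination, then compares R-squared before and after centering one predictor. The data and helper names (`solve`, `r_squared`) are hypothetical and chosen for illustration only.

```python
import math

def solve(a, b):
    """Solve the linear system a @ beta = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    beta = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * beta[c] for c in range(r + 1, n))
        beta[r] = (m[r][n] - s) / m[r][r]
    return beta

def r_squared(cols, y):
    """R^2 of an OLS fit of y on an intercept plus the given predictor columns."""
    X = [[1.0] + [col[i] for col in cols] for i in range(len(y))]
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    beta = solve(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, row)) for row in X]
    ybar = sum(y) / len(y)
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    sst = sum((yi - ybar) ** 2 for yi in y)
    return 1 - sse / sst

# Hypothetical data
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0]
y = [3.1, 3.9, 7.2, 7.8, 11.1, 11.9]
x1_mean = sum(x1) / len(x1)
x1_centered = [v - x1_mean for v in x1]

r_raw = r_squared([x1, x2], y)
r_cen = r_squared([x1_centered, x2], y)
print(r_raw, r_cen, math.isclose(r_raw, r_cen))
```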

The estimated coefficient of a regression line is positive when the coefficient of determination is positive. Ans***False - R-squared is always nonnegative, whatever the sign of the slope.
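
In a model with an intercept, R-squared is a ratio of sums of squares, so it cannot be negative. In SLR it is also the square of r (the last equality below holds in SLR):

$$
R^2 = \frac{\mathrm{SSR}}{\mathrm{SST}} = 1 - \frac{\mathrm{SSE}}{\mathrm{SST}} = r^2 \ge 0
$$

A negative slope gives a negative $r$, but $r^2$ is still nonnegative.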

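If the outcome variable is quantitative and all explanatory variables take values 0 or 1, a logistic regression model is most appropriate. Ans***False - logistic regression is for a binary response, not a quantitative one. Binary predictors alone do not determine the model, and more investigation is needed to choose the correct one.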

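After fitting a logistic regression model, a plot of residuals versus fitted values is useful for checking whether model assumptions are violated. Ans***False - raw residuals from a 0/1 response fall on two curves (1 − p̂ and −p̂) and are not informative, so use deviance residuals for logistic regression.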
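
For a binary response, the deviance residual of observation $i$ is $\operatorname{sign}(y_i - \hat{p}_i)\sqrt{-2[y_i\log\hat{p}_i + (1-y_i)\log(1-\hat{p}_i)]}$. The squares of these residuals sum to the residual deviance. The sketch below computes them from hypothetical fitted probabilities. In practice, the probabilities come from the fitted model; in R, `residuals(fit, type = "deviance")` returns these residuals for a `glm` fit.

```python
import math

def deviance_residuals(y, p):
    """Deviance residuals for a binary (0/1) response y with fitted probabilities p."""
    res = []
    for y_i, p_i in zip(y, p):
        # Contribution of observation i to the deviance
        d2 = -2 * (y_i * math.log(p_i) + (1 - y_i) * math.log(1 - p_i))
        sign = 1 if y_i > p_i else -1
        res.append(sign * math.sqrt(d2))
    return res

y = [1, 0, 1, 1, 0]
p_hat = [0.8, 0.3, 0.6, 0.9, 0.1]  # hypothetical fitted probabilities
r = deviance_residuals(y, p_hat)
print([round(v, 3) for v in r])
# The sum of squared deviance residuals is the residual deviance
print(sum(v ** 2 for v in r))
```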
